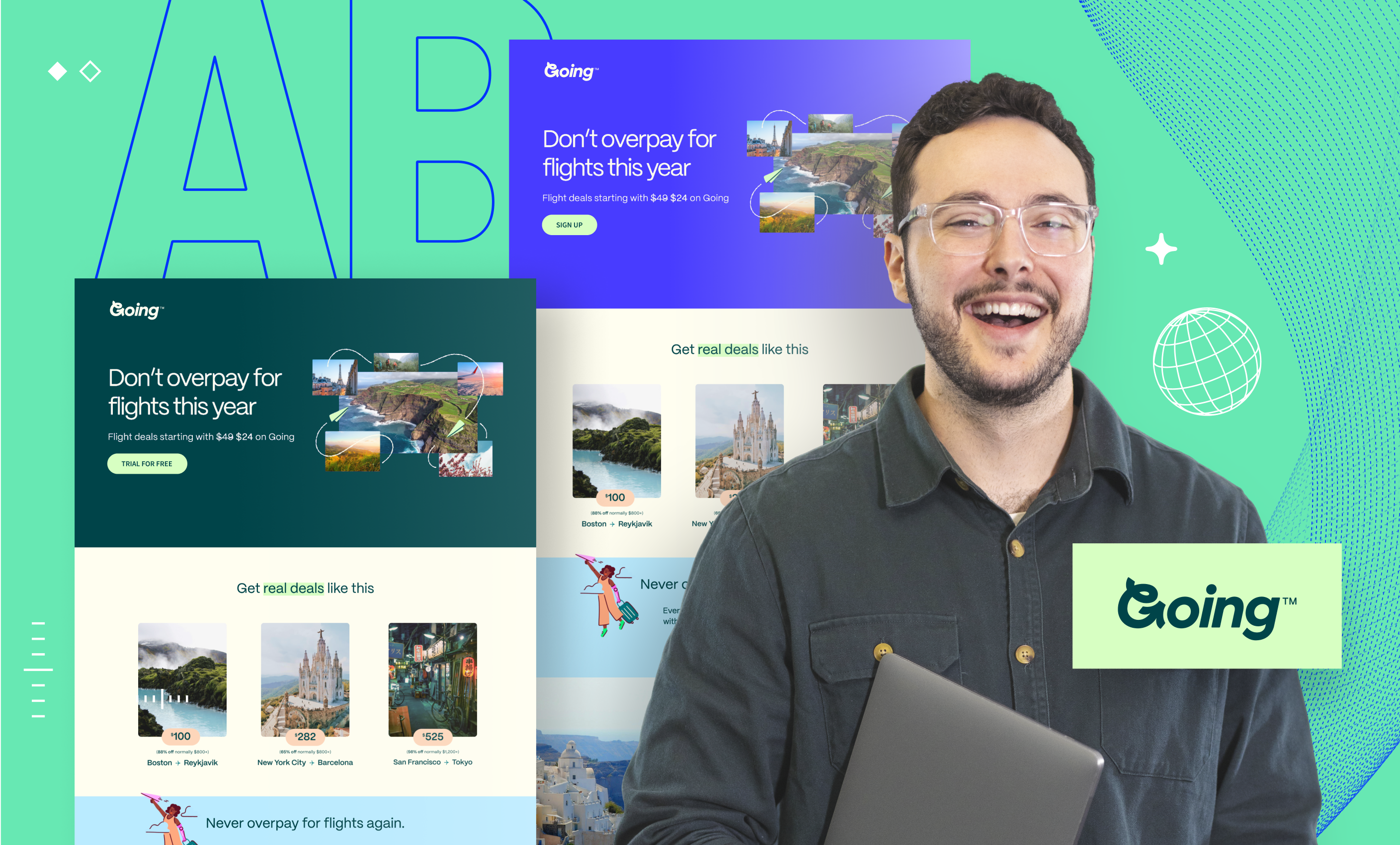

Josh Gallant

Josh is the founder of Backstage SEO, an organic growth firm that helps SaaS companies capture demand. He’s a self-proclaimed spreadsheet nerd by day, volunteer soccer coach on weekends, and wannabe fantasy football expert every fall.

Michael Aagaard

Michael Aagaard has been working full time with CRO since 2008. He has helped companies all over the world, including Unbounce, improve their online businesses. Michael’s approach to CRO revolves around research and consumer psychology. He is a sought-after international keynote speaker and is generally known as one of the most passionate and enthusiastic people in the industry. Get in touch with Michael via http://michaelaagaard.com.

In this article, you’ll learn:

- What makes a good A/B testing hypothesis

- The components of an A/B testing hypothesis

- Best practices for better hypotheses

- How you can start testing with confidence

Ready? Let’s dig in.

What is a hypothesis in A/B testing?

An A/B testing hypothesis is a clear, testable statement predicting how changes to a landing page or element will impact user behavior. It guides the experiment by defining what you’re testing and the expected outcome, helping determine if the changes improve metrics like conversions or engagement.

Zooming out a bit, the actual dictionary definition of a hypothesis is “a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation.”

Wordy…

Put simply, it’s an educated guess used as a starting point to learn more about a given subject.

In the context of landing page and conversion rate optimization, a test hypothesis is an assumption you base your optimized test variant on. It covers what you want to change on the landing page and what impacts you expect to see from that change.

Running an A/B test lets you examine to what extent your assumptions were correct, see whether they had the expected impact, and ultimately get more insight into the behavior of your target audience.

Formulating a hypothesis will help you challenge your assumptions and evaluate how likely they are to affect the decisions and actions of your prospects.

In the long run this can save you a lot of time and money and help you achieve better results.

What are the key components of an A/B testing hypothesis?

Now that you’ve got a clear definition of what an A/B testing hypothesis is and why it matters, let’s look at the ins and outs of putting one together for yourself.

In general, your hypothesis will include three key components:

- A problem statement

- A proposed solution

- The anticipated results

Let’s quickly explore what each of these components involves.

The problem statement

Your first task: Ask yourself why you’re running a test.

In order to formulate a test hypothesis, you need to know what your conversion goal is and what problem you want to solve by running the test—basically, the “why” of your test.

So before you start working on your test hypothesis, you first have to do two things:

- Determine your conversion goal

- Identify a problem and formulate a problem statement

Once you know what your goal is and what you presume is keeping your visitors from realizing that goal, you can move on to the next step of creating your test hypothesis.

The proposed solution

Your proposed solution is the bread and butter of your hypothesis. This is the “how” portion of your test, and it outlines the change you’ll make to address the problem and reach your goal.

Basically, if you’re looking at an A/B test, start thinking of the elements you want to test and how those will support you reaching your goal.

The anticipated results

This is the final component of a good A/B test hypothesis and is your educated guess at what results you anticipate your test delivering.

Basically, you’re trying to predict what the test will achieve—but it’s important to remember you don’t need to worry about accuracy here. Obviously, you don’t want your results to be a total surprise, but you’re just trying to outline a ballpark idea of what the test will achieve.

Putting these three components together is how you develop a strong hypothesis, outlining the why, how, and what that will shape your test.
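If it helps to see the three components side by side, here is a minimal sketch of a hypothesis written down as a small Python record. The `Hypothesis` class, its field names, and the signup-form example are all made up for illustration; they are not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical container for the three components of a test hypothesis."""

    conversion_goal: str
    problem_statement: str   # the "why"
    proposed_solution: str   # the "how"
    anticipated_result: str  # the "what"

    def statement(self) -> str:
        """Combine the solution and the expected result into one sentence."""
        return f"By {self.proposed_solution}, I can {self.anticipated_result}."


# Invented example values, used only to show the shape of a hypothesis.
signup_form = Hypothesis(
    conversion_goal="newsletter signups",
    problem_statement="The signup form asks for too much information.",
    proposed_solution="cutting the form down to a single email field",
    anticipated_result="get more visitors to sign up",
)

print(signup_form.statement())
```

Writing the problem statement down next to the solution makes it harder to skip the "why" and jump straight to a change you happen to like.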

How to generate a hypothesis for multiple tests

Generating a hypothesis for multiple A/B tests, or even multivariate tests, follows the same logic and process as a single test. You identify a problem, propose a solution, and predict your results.

The challenge in this instance, though, is that it can be really tempting to create a hypothesis and try to apply it to multiple different variables on a landing page. If you’re testing multiple things at once, it’s harder to determine what’s causing the results.

But that doesn’t mean you don’t have options.

Multivariate testing lets you compare several variables at once by testing combinations of changes across multiple page variants (not just the single variable across two versions you get with an A/B test). These tests are a bit more complicated than an A/B test, but they can still deliver incredible insights.

Generating a hypothesis for a multivariate test follows the same principles as it always does, but you need to pay closer attention to how altering multiple elements on one or more pages will impact your results.

Make sure to track those variables closely to ensure you can measure effectiveness.
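To get a feel for why multivariate tests need closer tracking, it can help to count the combinations involved. The sketch below assumes three hypothetical page elements with two versions each; the element names and copy are invented for illustration.

```python
from itertools import product

# Hypothetical page elements and their versions (illustrative only).
elements = {
    "headline": ["original", "benefit-led"],
    "cta_copy": ["Download now", "Get my free ebook"],
    "hero_image": ["photo", "illustration"],
}

# Every combination of versions is its own page variant to track.
combinations = [
    dict(zip(elements, combo)) for combo in product(*elements.values())
]

print(f"{len(combinations)} variants to track")
for variant in combinations:
    print(variant)
```

Three elements with two versions each already produce eight variants, and each extra element doubles that number, so your hypothesis should say which combination you expect to win and why.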

A/B testing hypothesis examples

Let’s look at a real-world example of how to put it all together.

Let’s say there’s a free ebook that you’re offering to the readers on your blog:

Image courtesy ContentVerve

Data from surveys and customer interviews suggests you have a busy target audience that doesn’t have much spare time to read ebooks, and the time it takes to read one could be a barrier that keeps them from downloading it.

With this in mind, your conversion goal and problem statement would look something like this:

- Conversion goal: ebook downloads

- Problem statement: “My target audience is busy, and the time it takes to read the ebook is a barrier that keeps them from downloading it.”

With both the conversion goal and problem statement in place, it’s time to move on to forming a hypothesis on how to solve the issue set forth in the problem statement.

The ebook can be read in just 25 minutes, so maybe you can motivate more visitors to download the book by calling out that it’s a quick read—these are your expected results.

Now say you’ve got data from eye-tracking software that suggests the first words in the first bullet point on the page attract more immediate attention from visitors. This information might lead you to hypothesize that the first bullet is thus the best place to address that time issue.

If you put the proposed solution and expected results together, you get the following A/B test hypothesis:

“By tweaking the copy in the first bullet point to directly address the ‘time issue’, I can motivate more visitors to download the ebook.”

Great! Now, it’s time to work on putting your hypothesis to the test. To do this, you would work on the actual treatment copy for the bullet point.

Image courtesy ContentVerve

Remember: until you test your hypotheses, they will never be more than hypotheses. You need reliable data to prove or disprove the validity of your hypotheses. To find out whether your hypothesis will hold water, you can set up an A/B test with the bullet copy as the only variable.
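Once the test has run, you need some way to judge whether the difference in downloads is more than noise. This article doesn’t prescribe a statistical method, so treat the following as one common option rather than the required approach: a two-proportion z-test, sketched with Python’s standard library. The visitor and download counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for the control (A).
    conv_b / n_b: conversions and visitors for the treatment (B).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: original bullet copy vs. the "quick read" bullet copy.
z, p_value = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the treatment copy really did change download behavior. Most A/B testing tools run a calculation along these lines for you, though the exact method varies by tool.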

6 best practices for making a winning hypothesis

Working with test hypotheses provides you with a much more solid optimization framework than simply running with guesses and ideas that come about on a whim.

But remember that a solid test hypothesis is an informed solution to a real problem—not an arbitrary guess. The more research and data you have to base your hypothesis on, the better it will be.

To that end, let’s look at six best practices you can follow for making a winning A/B test hypothesis:

1. Be clear and specific

The more specific you can get with your A/B test hypothesis, the stronger it will be.

When you’re developing your hypothesis, avoid any vague statements. Make sure your hypothesis clearly states the change you’re making and the outcome you expect to see.

As an example, a statement like “changing the button color will increase clicks” is broad and vague. Instead, specify the color you’re changing your CTA button to and give a clearer estimate of how much you expect clicks to increase.

Your amended statement could look something like: “Changing the CTA button to blue will increase the number of clicks by 20%.”
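A hypothesis with a number attached, like the 20% above, can be checked directly once results come in. Here is a rough sketch of that comparison; the `relative_lift` helper and the click counts are hypothetical.

```python
def relative_lift(control_rate, variant_rate):
    """Relative change of the variant over the control, e.g. 0.20 for +20%."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


# Hypothetical click data for each version of the button.
control_clicks, control_visitors = 500, 10_000
variant_clicks, variant_visitors = 610, 10_000

predicted_lift = 0.20  # the "20%" stated in the hypothesis
observed_lift = relative_lift(
    control_clicks / control_visitors,
    variant_clicks / variant_visitors,
)

print(f"Predicted {predicted_lift:.0%}, observed {observed_lift:.0%}")
print("Prediction met" if observed_lift >= predicted_lift else "Prediction not met")
```

Keep in mind this only compares the two click rates. It says nothing about whether the difference is statistically reliable, so don’t read too much into a lift measured on a small number of visitors.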

2. Focus on a single variable

A/B testing is all about comparing the performance of a single variable across two versions. Introducing more variables changes the nature of the test and thus the nature of your hypothesis.

Don’t muddy the waters by trying to do too much in one test. This will make it much harder to spot what change led to any observed effect, let alone whether it confirmed your hypothesis.

Remember, you can always continue to test additional variables—pick one to start and focus on getting results there before you dig in elsewhere.

If you’re not sure where to start, we put together a list of 18 elements on a landing page you can test to help you get started.

3. Base it on data and quantifiable insights

Like we said above, the more data you have, the better. Any hypothesis you develop should be based on quantifiable data and insights to get the most out of your test.

Google Analytics, customer interviews, surveys, heat maps and user testing are just a handful of examples of valuable data sources that will help you gain insight on your target audience, how they interact with your landing page, and what makes them tick.

Look at past data, user feedback, and market research to inform your hypothesis. This ensures that your experiment is grounded in reality and increases the likelihood of meaningful results.

As an example, if previous data suggests that users prefer blue buttons, your hypothesis might be that changing the button color from green to blue will increase engagement.

Not sure what data you should be focusing on? Here are a few metrics and KPIs to keep in mind:

- Bounce rate

- Conversion rate

- Form abandonment rate

- Time on page

- Page load time

- Exit rate

- Click-through rate
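Several of these metrics are simple ratios you can work out from raw counts. The sketch below uses widely used definitions, but analytics tools differ in exactly how they define each one, so check yours before comparing numbers. All counts are invented.

```python
def rate(part, whole):
    """Return part / whole as a fraction, or 0.0 when there is no traffic."""
    return part / whole if whole else 0.0


# Hypothetical counts for one landing page over one reporting period.
counts = {
    "sessions": 4000,
    "single_page_sessions": 1800,
    "conversions": 200,
    "cta_views": 3500,
    "cta_clicks": 420,
    "form_starts": 500,
    "form_submits": 200,
}

metrics = {
    # Share of sessions that left after viewing only this page.
    "bounce_rate": rate(counts["single_page_sessions"], counts["sessions"]),
    # Share of sessions that completed the conversion goal.
    "conversion_rate": rate(counts["conversions"], counts["sessions"]),
    # Share of CTA views that turned into clicks.
    "click_through_rate": rate(counts["cta_clicks"], counts["cta_views"]),
    # Share of started forms that were never submitted.
    "form_abandonment_rate": rate(
        counts["form_starts"] - counts["form_submits"], counts["form_starts"]
    ),
}

for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Recording these baseline numbers before you write your hypothesis gives you something concrete to compare your test results against.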

4. Make sure it’s testable

You need to be able to measure the results of your test. Defining specific metrics and KPIs—such as click-through rates and especially conversion rate—is a great way to make sure what you’re doing is testable and measurable.

If the best practices you’ve read to this point are sounding familiar, that’s because they broadly align with the concept of SMART goals—that is, making sure that your hypothesis is specific, measurable, achievable, relevant, and timely.

Making sure your hypothesis is clear and testable will also ensure you can easily interpret your results, removing or reducing the risk of subjective interpretation.

5. Don’t ignore context

How does your test fit into your broader conversion rate optimization goals and strategies? As much as you’re hypothesizing about the specific results of your test, a strong hypothesis also acknowledges how your experimentation will benefit your efforts at large.

Whenever you’re developing a hypothesis, consider other potential interactions with elements on your website, your email marketing, or even the products and services you’re offering. Changing an element in one location may have different effects depending on where it sits in the overall buyer’s journey.

6. Keep it realistic

The final best practice to keep in mind when developing a strong A/B test hypothesis is to aim high but keep a level head—no matter how ambitious you may be, it’s important to keep your expectations realistic and grounded.

An overly ambitious goal could lead to disappointment at best and missed targets at worst. This is why it’s critical to benchmark against past performance.

Even so, that doesn’t mean you need to be constantly doubting your efforts. Just because you’re being realistic about your expectations doesn’t mean you have to scale down tests and initiatives.

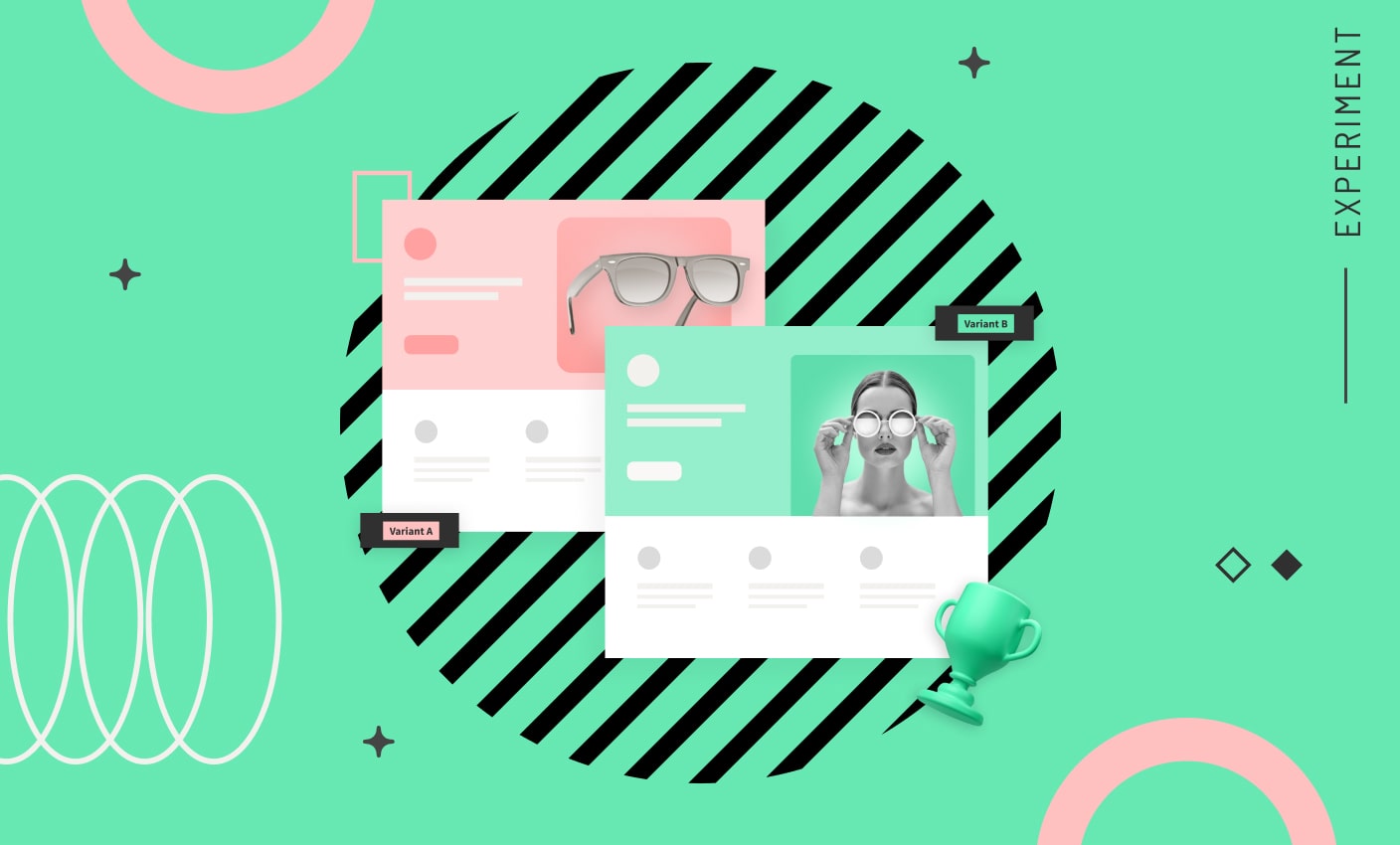

Take, as an example, travel company Going, which ran a simple A/B test around CTA phrasing that led to a 104% increase in conversions.